Measuring AI's Creativity with Visual Word Puzzles

How well can AI models solve (and create) rebus puzzles?

Introduction

What does it mean for an AI to be creative?

Last year, I wrote an article about measuring creativity in Large Language Models (LLMs) using several word-based creativity tests.

Since then, AI has developed rapidly and is capable of processing and creating both text and image. These models, sometimes referred to as “Multimodal Large Language Models” (MLLMs), are extremely powerful and have advanced abilities to understand complex textual and visual inputs.

In this article, I explore one way to measure creativity in two of popular MLLMs: OpenAI’s GPT-4 Vision and Google’s Gemini Pro Vision. I use rebus puzzles, which are word puzzles that require combining both visual and language cues to solve.

Creativity is extremely multi-faceted and difficult to define as a single trait. Therefore, in this article, I aim not to measure creativity in general, but to evaluate one very specific aspect of creativity.

Note [modified from my earlier article]: These experiments aim not to measure how creative AI models are, but rather to measure the level of creative process present in their model generations. I am not claiming that AI models possess creative thinking in the same way humans do. Rather, I aim to show how the models respond to particular measures of creative processes.

Rebus Puzzles

A rebus puzzle is a picture representation of common words or phrases. They often involve a combination of visual and spatial cues. For example, below are six examples of rebus puzzles (answers are at the end of the article1).

Rebus puzzles are great for evaluating models with, because while they require some level of cleverness and creativity to solve, they have a “right answer”.

You might have come across rebus puzzles in your childhood (for me, these were fun puzzles I did in middle school after exams). However, rebuses are not an entirely modern invention! They’ve been used throughout history, from Leonardo da Vinci to Voltaire.

In order to solve rebus puzzles, you really have to ↓

This is why rebus puzzles are a great way to understand AI models’ understanding of text, images, and the creative connections between them. Solving a rebus puzzle requires knowledge of language and wordplay where oftentimes the visual layout is also important.

Dataset of rebus puzzles

I used a dataset of 84 rebus puzzles from Normative Data for 84 UK English Rebus Puzzles2, a 2018 study published in Frontiers in Psychology, which studies the ability of 170 study participants (with an age range of 19 to 70 years) to solve rebus puzzles. These are copyright-free puzzles selected from the internet containing familiar UK English phrases.

The study categorized the puzzles into six types (see the images above). Some categories were more difficult to solve than others — for example, (1) “Word over word” puzzles tended to have a higher success rate than (3) “Word within word” puzzles.

Puzzle difficulty level

The difficulty of each rebus puzzle varied. The original paper provided data on the success rate of human participants in solving a rebus puzzle. The average success rate score was about 50% (with a median of 47%). Some puzzles had over a 95% success rate and others had a less than 5% success rate.

I mapped each problem to 4 difficulty scores (Easy, Medium, Hard, Extra Hard) based on the scores.3 I labeled puzzles the study participants solved with a higher success rate as “Easy” and the ones they struggled with as “Extra Hard”.

Below is an example for each of the categories:

Answers are at the end of the article.4

Experimental setup

I took the same rebus puzzles from the UK English Rebus Puzzles dataset and evaluated two closed-source and publicly available multimodal LLMs, OpenAI’s GPT-4 Vision and Google’s Gemini Pro Vision, on their ability to solve the puzzles. (I also evaluated the open-source LLaVA model, but it wasn’t able to solve a single puzzle correctly — I will explain this more in the Discussion section.)

For each model, I experimented with using two setups:

Zero-shot = Providing a model with a single rebus and having it predict what phrase it represents.

Few-shot = Providing a model with three “practice” puzzles before having it predict what phrase a rebus represents. These “practice” puzzles were the same ones provided to the the human participants in the original study.

Zero-shot and few-shot simulate how well models (or humans) perform on tasks if they’re given examples to learn from beforehand or not.

Results

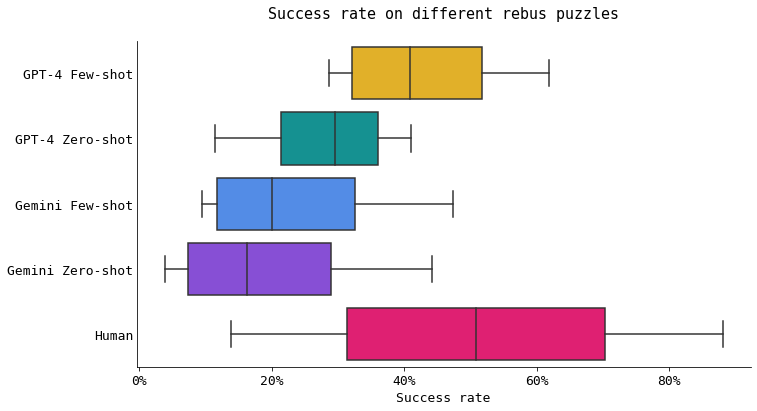

GPT-4 using few-shot is the best model at solving rebus puzzles, but humans are better

On average, all of the models were worse at solving rebus puzzles than humans were. GPT-4 with few-shot prompting was the best model at solving the puzzles. Adapting the prompting method from zero-shot to few-shot improved GPT-4’s success rate by a great deal. That is, giving practice puzzles to GPT-4, rather than having it solve a rebus puzzle without practice puzzles, improved its performance.

However, for Gemini, there was little difference in using zero-shot vs. few-shot for solving the puzzles. For Gemini, its success rate didn’t differ much whether or not it was given practice puzzles.

Puzzles that are easy for humans are more difficult for models

This heat map shows the average success rate for each model and rebus difficulty level. For easy puzzles, none of the models (on average) were close to reaching human level. Humans were able to solve 88% of “Easy” rebus puzzles, whereas the models were about to solve (on average) fewer than 50% of those same puzzles.

Here are two examples of puzzles that 90% of human participants solved correctly, but the models struggled to solve. For example, incorrect answers by models for “long overdue” included “overdue” (without the long). Incorrect answers by models for “wave goodbye” included “wavy goodbye” and “goodbye within the box”, as well as just “goodbye” (without the wavy).

Puzzles that are difficult for humans are less hard for (some) models

For medium difficulty puzzles, GPT-4 with few-shot was nearly on par with human level. For extra hard difficulty puzzles, the success rate of GPT-4 with few-shot was much higher than that of humans.

This means that GPT-4 with few-shot was able to figure out some of the more difficult or arcane puzzles that humans really struggled to solve.

Here are two examples of puzzles that only 1/84 human participants were able to solve, but GPT-4 with few-shot was able to solve correctly!

Usually, few-shot > zero-shot, except for Gemini on a subset of puzzles

In general, using few-shot yielded higher success rates than using zero-shot. This makes sense — this is the difference between giving a model several examples before having it solve a puzzle, vs. giving it no examples before solving a puzzle.

However, this is not the case for Gemini, particularly on “Hard” difficulty puzzles. For some unknown reason, for this particular subset of problems, Gemini was able to solve puzzles at a nearly twice as high success rate for zero-shot vs. few-shot.

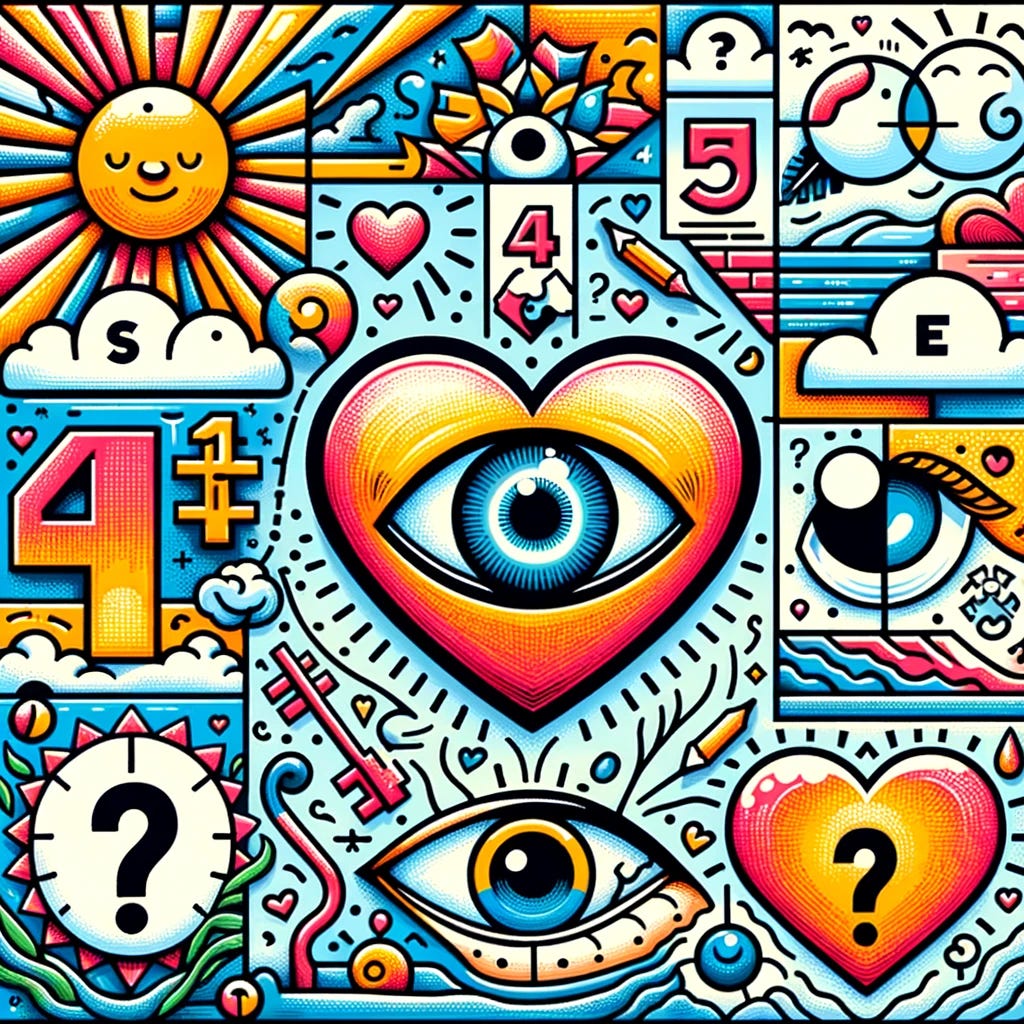

Generating rebuses

So far, I evaluated GPT-4’s and Gemini’s abilities of solving existing rebus puzzles. But how good are they at creating new rebus puzzles?

You can decide for yourself. Here are a few examples:5

GPT-4 attempt #1: “Love for eye”

Heart Symbol: Often represents the word "love."

Number 4: This is simply "four."

Eye Illustration: Represents the word "eye."

When you combine these elements in the order they appear, the phrase or idiom they suggest is "Love for eye".

— GPT-4

GPT-4’s attempt #2: “I love you”

The eye symbolizes "I", the heart symbolizes "love", and the sheep, which sounds like "ewe", is a homophone for "you". So, when you put it all together, the phrase is "I love you".

— GPT-4

GPT-4 attempt #3: “Be happy”

Gemini attempt #1: “Sonic boom”

The sun and cactus represent "son" and "cactus," which together sound like "sonic boom."

— Gemini

Gemini attempt #2: “I see the light”

The answer to the rebus puzzle is "I see the light".

The sun represents "I"

The bitten apple represents "see"

The bird represents "the"

The rainbow represents "light"

— Gemini

Gemini attempt #3: “Sunbite”

Sun + bite + U = Sunbite

— Gemini

Discussion

Gemini vs GPT-4: how did they perform?

In general, both Gemini and GPT-4 were not bad at solving rebus puzzles. While on average they were worse than humans, both models were able to solve more difficult puzzles that humans were not able to solve.

GPT-4 was better at solving rebus puzzles overall. Using few-shot prompting showed a huge improvement in success rate compared to zero-shot.

Gemini was better at solving a subset of the rebus puzzles. For Gemini, using few-shot did not show much improvement in success rate compared to zero-shot.

The possibility of having memorized certain rebus puzzles

All of the rebus puzzles used in the study were sourced from the Internet, so it’s very possible that both GPT-4 and Gemini saw some proportion of those rebuses in their training data. This might also explain why GPT-4 was able to solve a high rate of extra hard problems that human struggled with is that some of those images were perhaps seen in its training data.

One way to solve this is using a dataset such as the REBUS dataset, which contains over 300 hand-curated, original rebus puzzles (e.g. GPT-4 and Gemini has not seen these in their training data … yet …). However, the REBUS dataset does not have a human benchmark to compare model performance on the puzzles against, making it difficult to determine if the models are struggle to solve certain puzzles because the models aren’t capable, or if humans would have equally struggled with those puzzles.

It’s a lot easier to solve rebus puzzles than to create them

Maybe it’s just me, but the rebus puzzles generated by both GPT-4 and Gemini didn’t really make much sense. To me, it felt like the models were going through the motions of creating a rebus puzzle (such as describing each object in the generated image) without fully tying the components of the puzzle together in a way that made sense.

Neither model was able to fully make a puzzle that made both visual and verbal sense.

(As a side note, Gemini really liked including images of the sun in the rebus puzzles it generated.)

What about open source models?

I prompted LLaVA (Large Language and Visual Assistant), an open-source multimodal language model, on the 84 rebus puzzle dataset. It was not able to correctly solve a single puzzle!

This really showed me that open-source models are still a little behind, at least in this aspect of multimodal creativity, compared to the closed-source models. I hope in the future, as open-source models improve, their capability to create funky and arcane rebus puzzles will emerge.

Measuring creativity in models (and humans) is not going to be a single test

There is still a long way to go to understand creativity — not just in models, but also in humans. While there exist a slew of tests to evaluate language, visual, and spatial creativity, it is important to look beyond these to think about what other methods exist to understand models’ creative or clever behaviors.

Rebuses (and word puzzles in general) are fun because they require skillsets not always required in a day-to-day setting (at least, not in an obvious way — maybe I need to be including more cryptic rebuses in my work emails).

Here is a set of a cryptic rebus puzzles generated by Gemini meant to represent a very normal phrase (see the caption for the phrase). Whatever is going on inside the neural networks of these AI models … it’s definitely not boring.

Fun facts

Historically, Voltaire and Frederick the Great exchanged the following rebus puzzle to ask about dinner plans.6

Rebuses were also popular in Edo-period Japan (known as hanjimono). Rebuses are still used in corporate and product logos.

A rebus for the names of Japanese provinces, from around 1800, sourced from Wikipedia. There is a nice guide on how to solve rebus puzzles

Rebus Collection is an amazing collection of rebuses used throughout history. For example, a farmer’s love letter from 1909 Iowa.

Enjoyed what you read? Feel free to leave a comment … what are some weird rebuses you can get the AI models to generate?

Citation

For attribution in academic contexts or books, please cite this work as

Yennie Jun, "Measuring AI's Creativity with Visual Word Puzzles", Art Fish Intelligence, 2024.@article{Jun2024airebus,

author = {Yennie Jun},

title = {Measuring AI's Creativity with Visual Word Puzzles},

journal = {Art Fish Intelligence},

year = {2024},

howpublished = {\url{https://www.artfish.ai/p/measuring-ais-creativity-with-visual},

}(1) Feeling on top of the world; (2) Try to understand; (3) Foot in the door; (4) Four wheel drive; (5) Half-hearted; (6) Parallel bars

Threadgold, E., Marsh, J. E., & Ball, L. J. (2018). Normative data for 84 UK English rebus puzzles. Frontiers in Psychology, 9, 2513.

I took the original distribution of the human participants’ success rates and split them into 4 buckets based on quantiles (so, each bucket had an equal number of rebus puzzles). Based on the quantiles, the discrete categories map to the following ranges:

Easy (78.82, 95.29]

Medium (47.06, 78.82]

Hard (25.002, 47.06]

Extra hard (1.179, 25.002] (Easy) Man overboard; (Medium) Too little, too late; (Hard) Forgive and forget; (Extra hard) Reading between the lines

For both GPT-4 and Gemini, I used the prompt: Create me an image of a rebus puzzle and explain it.

Gemini: https://gemini.google.com/

GPT-4: https://chat.openai.com/

Danesi, Marcel (2002). The Puzzle Instinct: The Meaning of Puzzles in Human Life (1st ed.). Indiana, USA: Indiana University Press. p. 61. ISBN 0253217083.

Great post! I love the cryptic generations...