Exploring Creativity in Large Language Models: From GPT-2 to GPT-4

Analyzing the evolution of creative processes in large language models through creativity tests

In recent weeks, people have used large language models (LLMs) to generate a variety of creative content, such as books, flash fiction, rap battles, and music chords. But is it possible to measure the level of creative process more broadly in these models?

Human creativity has long fascinated psychologists and researchers. Starting in the 1950s, researchers created a series of tests to compare the creative performance and potential of individuals. While no single test can fully capture creativity, these tests attempted to measure and quantify different aspects of it.

In this article, I analyze the performance of GPT models from 2019 to 2023 on tests that measure two kinds of creativity: convergent (a single, correct solution exists) and divergent (open ended; multiple solutions may exist).1 The tests include:

Connecting three seemingly unrelated words with a fourth word (Remote Associates Test)

Producing as many alternative uses for everyday objects (Alternate Uses Task)

Naming ten nouns that differ as much as possible from each other (Divergent Association Task)

Through these analyses, I focus on the change in performance from the earlier GPT-2 model all the way to the newest GPT-4 model. I use both a subjective and objective lens to understand the behavior of these models. In general, the later models tend to perform better on all of the creativity tasks, although I do find a few exceptions where, when the test has a “correct” answer”, the simpler GPT-2 model outperforms the newer GPT4 model. When possible, I compare the GPT models to human performances on the same creativity tests.

Note: These experiments aim not to measure how creative the models are, but rather to measure the level of creative process present in the model generations. I am not claiming that the GPT models, or LLMs in general, possess creative thinking in the same way humans do. Rather, I aim to show how the models respond to particular measures of creative processes.

A Progression of GPT Models

Generative Pre-trained Transformer (GPT) models are language models trained on vast portions of the Internet to predict the next token based on previous tokens. While the recent ChatGPT has captured the public’s attention in the last few months, OpenAI’s GPT models (and the technologies behind the models) have been around for a few years.

GPT-1 (2018, 117M parameters). The original GPT model. I used the open-sourced version on HuggingFace. However, the outputs were so gibberish so I did not include any of them in this article.

Paper: Improving Language Understanding by Generative Pre-Training

GPT-2 (2019, 1.5B parameters). I used the open-soured version on HuggingFace. For some experiments, GPT-2 outputs were gibberish and not useful for understanding its behavior, so I did not include those in the respective analyses. Paper: Language Models are Unsupervised Multitask Learners.

GPT-3 (2020, 175B parameters). Over 100 times bigger than GPT-2. I used text-davinci-002 from the OpenAI APIs.

Paper: Language Models are Few-Shot LearnersGPT-3.5 (2022). While there are multiple versions of GPT-3.5, I use the same model underlying the original ChatGPT, which was trained using reinforcement learning with human feedback. I used gpt-3.5-turbo from the OpenAI APIs.

GPT-4 (2023, ~100T parameters). I used gpt-4 from the OpenAI APIs.

Paper: GPT-4 Technical Report.

For all of the experiments in this article, unless otherwise mentioned, I called each GPT model five times per unique prompt to account for randomness in its generations.

1. Remote Associates Test: Connecting Unrelated Words

The Remote Associates Test (RAT) is a creativity test developed by Martha Mednick in 1962.2 This test measures convergent thinking, meaning that each question has a “right” answer.

Given three seemingly unrelated words, the test taker must find a fourth word connecting the three words. For example, the words "dream", "break", and "light" can be linked by the word "day" (daydream, daybreak, daylight). The words “sense”, “courtesy”, and “place” can be linked by the word “common”.

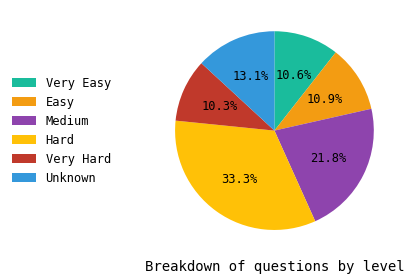

I collected the word triplets from the Remote Associates Test website. There were a total of 312 questions. The difficulty of each question was rated on a scale from Very Easy to Very Hard.

For each model and each triplet of words, I used the following prompt template. I called each model 5 times to account for randomness.

You are given three words. Think of a fourth word that is somehow related to each of the first three words. First give the answer ("Answer") then explain your reasoning ("Reason").

Words: {Word1}, {Word2}, {Word3}Newer GPT models score higher on remote association

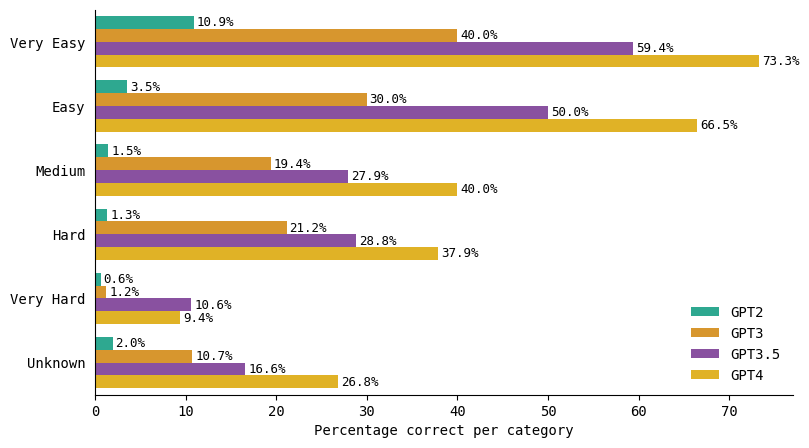

I calculated the overall percent of questions each GPT model generated a correct answer for. It may come as no surprise that the the newer the model, the more correct answers it generated.

Breaking down model performance by question difficulty level showed a similar trend: newer GPT models performed better than older ones on each difficulty level. And while the newer GPT models performed better than the older ones on hard questions, the improvement was less drastic than for easier questions. This means that even GPT-4 struggled on difficult questions.

According to the Remote Associates Test website, “A typical person can solve most of the items marked as easy, about half of the medium ones, and few of the hard ones.” There were no official statistics shared on the website, but a quick glance would show that GPT-4 did indeed solve around half of the medium ones and a few of the hard ones, making it the only model approximately comparable to human level. The other GPT models were worse than human performance.

A few exceptions: when GPT-2 gets it right but GPT-4 gets it wrong

While it didn’t happen often, there were a few questions for which the more rudimentary GPT-2 model from 2019 was able to generate the correct answer but the GPT-4 model from 2023 was not able to. In fact, there were 8 such questions. (The full list is shown in the Appendix at the end of the article).

An easy question

Words: cottage / brick / cake

GPT-2: “cheese” [correct answer]

GPT-4: “layer”

A medium question

Words: cat / number / phone

GPT-2: “call”

GPT-4: “dial”

A hard question

Words: through / disk / way

GPT-2: “drive” [correct]

GPT-4: “circle”

A very hard question

Words: land / hand / house

GPT-2: “farm” [correct]

GPT-4: “property”

Notice that for all of these questions, GPT-4’s answers are not unreasonable. In fact, without knowing the correct answer beforehand, you might assume that GPT-4’s answer could as likely connect the three words. If you’re thinking that, you’re not wrong. I’ll address this in the next section.

Was GPT-4 wrong or just thinking outside of the box?

GPT-4 answered more than half of the questions incorrectly, but maybe with good reason. I asked GPT-4 to explain each of its answers. Then, I looked at its explanations for a few of the “Very Hard” questions that it got wrong.

Words: artist / hatch / route

Correct answer: “escape”

GPT-4 answer: “map”

The word "artist" might bring to mind the idea of creating something, perhaps even a visual representation of something. Maps are a common form of visual representation that require a degree of artistic skill to create. The word "hatch" could refer to the act of opening something or bringing something into existence. A map is a type of blueprint or plan, often used to navigate new territory or chart out a course of action. The word "route" is related to direction and movement, which can be plotted and tracked on a map. A map can help you determine the best route to take when traveling, and can also provide information about the routes others have taken in the past. All in all, "Map" seems to be a word that fits well with each of the three initial words, as it encapsulates the ideas of artistic creation, planning and direction, and movement and navigation.

GPT-4’s explanation for why “map” is a good word to associate with “artist / hatch / route” is convincing.

Here is another example:

Words: fork / dark / man

Correct answer: “pitch”

GPT-4 answer: “shadow”

The word "fork" can be associated with division or branching, such as a fork in the road. Shadows can also be seen as a type of division, as they represent the absence of light. The word "dark" refers to the absence of light. Shadows are created when light is blocked by an object, and can often appear darker than the surrounding area. The word "man" can be associated with the concept of identity or individuality. Shadows can also be used to represent the identity of a person, as they can provide clues about their shape and size. Therefore, "Shadow" seems to be a word that fits well with each of the initial three words, as it encapsulates the ideas of division, absence, and identity.

These examples show the pitfall of the Remote Associates Test, and of convergent creativity tests in general — that is, when the “correct” answer may not be the only “right” answer. In these cases, GPT-4 generated an answer that was “wrong”, but only because it did not meet the conventional “correct” answer.

In fact, if I ask GPT-4 to brainstorm multiple options for a fourth word for a triplet of words, it is able to give me multiple options with convincing answers.

GPT-4 answers arbitrarily generated triplet of words

It is possible that the GPT models may have seen some of the above Remote Associates Test questions in its training data. How would GPT-4 respond to an arbitrarily generated triplet of words? In this section, I construct my own “remote association” test and evaluate the outputs subjectively.

I took a list of the 10,000 most common English words.3 Then I randomly sampled three words from this list and had GPT-4 provide a fourth word tying these words together. Some of the three-word combinations were quite strange and unrelated, but GPT-4 always found a way to tie them together. I encourage the reader to try these first before reading GPT-4's generations!

After viewing the next few examples, you can make your decision — is GPT-4 thinking outside of the box? Or is it just shoehorning its answers? (A longer list of examples is shown in the Appendix at the end of the article).

⛑️ Health

This response read as quite convincing. I found it interesting that the definition for “health surveillance” was copied almost verbatim from the CDC website on Introduction to Public Health Surveillance.

Public health surveillance is “the ongoing, systematic collection, analysis, and interpretation of health-related data essential to planning, implementation, and evaluation of public health practice.” — Field Epidemiology

(from the CDC website)

Words: dietary / surveillance / born

Answer: Health

Reason:

1. Dietary - A person's diet is directly related to their health, as it can affect their overall well-being, energy levels, and risk for certain diseases.

2. Surveillance - Health surveillance is the ongoing, systematic collection, analysis, and interpretation of data essential to the planning, implementation, and evaluation of health practice.

3. Born - When a person is born, their health is of utmost importance, as it can have long-lasting effects on their development, growth, and overall quality of life.🍀 Clovers

I was initially convinced by GPT-4’s answer — in fact, it felt quite clever. That is, until I did a quick Google search and found out that Trifolium duodecim is not real. This is an example of a hallucination, a common phenomena in which LLMs make up an answer that is not factually correct.

Words: lucky / field / twelve

Answer: Clover

Reason:

1. Lucky - A four-leaf clover is considered a symbol of good luck.

2. Field - Clovers are plants that can be commonly found growing in fields.

3. Twelve - There is a variety of clover known as "Trifolium duodecim" (Twelve-leaf clover), which has 12 leaflets.☕️ Starbucks

This example was my favorite the answer, while thinking out of the box, felt like kind of a stretch. And it was a little bit funny (especially the part about Patrick).

Words: grande / cognitive / patrick

Answer: Starbucks

Reason:

1. Grande: Starbucks uses the term "grande" to refer to one of its medium-sized drinks.

2. Cognitive: Starbucks is a popular place for people to go to relax, study, and work, which are all activities that require cognitive function.

3. Patrick: Patrick Dempsey, the actor who played Dr. Derek Shepherd on the television show Grey's Anatomy, is known for his love of coffee and was nicknamed "McDreamy." Starbucks is a well-known coffee shop, so it's possible that his character would have frequented Starbucks.These examples and analyses illustrate that creativity tests with a single correct answer might be limited. This limitation is addressed in the other tests explored in this article in the next sections.

2. Alternate Uses Task: Finding Meaning in Ordinary Items

Another popular test for measuring creativity is the Alternative Uses Task (AUT), which was created by J. P. Guilford in 1967. Unlike the Remote Associations Test, this test measures divergent thinking, meaning that there is no right answer.

The test taker is asked to produce as many original uses for an everyday object as possible. For example, a “laptop” can be used as a “plate”, as a “body shield”, or as “wall art”.4 Responses to this test are measured in terms of metrics such as originality (how different the uses are) and flexibility (the assortment of ideas). The purpose of this test is to see how many unconventional uses a person can think of for an ordinary object.

However, due to my limited resources, I did not have the ability to generate these measures without human annotators. Therefore, I used a proxy for creativity, which was also used by researchers from the University of Amsterdam in Putting GPT-3’s Creativity to the (Alternative Uses) Test.

In this paper, the researchers asked both humans and GPT-3 (davinci-002) to come up with alternate use cases for the following common objects: fork, tin can, and book. As a proxy for gauging creativity, they measured the semantic distance between the object and the generated use cases — that is, how different is the generated use case word from the original object?

I obtained the human responses from the paper’s project website for comparison with human performance. I used the following prompt for the GPT models, based on a similar prompt used int he paper.

Come up with 10 creative uses for the following item that might strike people as clever, unusual, interesting, uncommon, humorous, innovative, or different: {item}Cosine Distance between embeddings as proxy for creativity

In natural language processing, semantic distance (or semantic similarity) is a proxy for how related two words are, based on how often words are used together in similar contexts. For example, the words “computer” and “screen” might have a shorter semantic distance, because they are often used in the same contexts, whereas the words “computer” and “lentils” might have a greater semantic distance, because they are not often used in the same contexts.

To compute the semantic distance of two words, I used the same approach as used by the researchers in the paper mentioned above. I used Spacy (`en_core_web_lg`), a natural language processing library, to calculate word embeddings for the object (e.g. “laptop”) and the use case word (e.g. “plate”). Then, I used cosine distance between the two embeddings to calculate the semantic distance.

For the Alternate Uses Task, larger semantic distances correlated with “more creative” use cases for objects. The results showed that for the three objects (fork, tin can, and book), human performance reliably resulted in higher cosine distances (and therefore higher semantic dissimilarity) than for GPT-3. However, there were cases when GPT-3.5 and/or GPT-4 matched or surpassed human creativity semantic distance scores.

While humans tended to come up with more creative use cases than GPT-3 (using semantic distance, as calculated by cosine distance, as a proxy for creativity), GPT-4 was more on par with human level. That is, GPT-4 responded with items resulting in larger semantic distances with the original item, at a similar level to human performance. However, without actual humans annotating and deciding more subjective categories such as originality, flexibility, and usefulness, this quantification using cosine distance is limited as it only measures a small part of the GPT models’ creative outputs.

Can you distinguish human-generated vs. AI-generated use cases?

One of the lists shows the human-generated alternate use cases for a tin can and the other shows alternate use cases generated by GPT-4. Can you tell the difference? The answer is at the end.

List 1: Uses for tin can

as a mirror

to create toys

as art

as a reminder of Andy Warhol

as a key ring with the clip from the can

as jewelry

List 2: Uses for tin can

miniature drum set

quirky plant pot

impromptu cookie cutter

homemade camp stove

whimsical wind chimes

miniature herb garden

The next test in the following section also measures divergent thinking.

3. Divergent Association Task: Ten Words, No Connections

The third creativity test is the Divergent Association Task (DAT), created more recently in 2021 by a group of researchers.5 This test involves naming ten nouns that differ from each other as much as possible. This test, as evident by its name, is divergent and also has no correct answers.

There is a version of the test online on this website. It only takes a few minutes to take, as it involves coming up with a list of ten nouns as unrelated as possible. Here’s my result from the website:

The DAT Score

How is the score calculated? The authors describe their process here. The DAT score is calculated using embeddings and cosine distance. I adapt6 their process for calculating the score:

Calculate GloVe embeddings for each word

Calculate the cosine distance between each pair of words

Take the average of that value

This process is similar to the method used to calculate creativity proxy score in the Alternate Uses Task, with the only difference being the word embedding model used.

Comparing Divergence among models

The following prompt, which I use to prompt the GPT models, is taken from the DAT website.

Please enter 10 words that are as different from each other as possible, in all meanings and uses of the words.

Rules

- Only single words in English.

- Only nouns (e.g., things, objects, concepts).

- No proper nouns (e.g., no specific people or places).

- No specialized vocabulary (e.g., no technical terms).

- Think of the words on your own (e.g., do not just look at objects in your surroundings).I did not include GPT-2 in the results below, as the outputs generated by GPT-2 were gibberish. (You can see an example of this in the Appendix at the end of the article).

The results show that the DAT score increased for each newer model. This means that later models were better at generating 10 words semantically different from each other as possible.

For example, the following list generated by GPT-3 had a score of 0.36:

dusk, night, day, dawn, morning, afternoon, evening, midnight, twilightOn the other hand, the following list generated by GPT-4 had a score of 0.95:

elephant, microscope, sandcastle, galaxy, harmony, spatula, glacier, philosophy, tambourine, droughtAccording to the paper introducing the DAT test, scores commonly ranged from 65 to 90, with an average between 75 and 80. A score of 95 was considered very high.

Something to note in the above figure is that the error bars for GPT-4 were much smaller than those for GPT-3. The significance of this becomes much clearer in the next experiment, in which I varied the amount of randomness in each GPT model’s generation by tweaking the “temperature” parameter.

Temperature is a value between 0 and 1, with values closer to 0 being the most predictable and 1 being the most random. I tried temperature values between 0.5 and 0.9 in increments of 0.1 (In previous experiments I kept the temperature at 0.7, which is the default value). I expected more variance for higher temperature values and more consistency for lower temperatures.

However, the results showed that the temperature did not matter as much as the model in terms of semantic distance variance. For GPT-3, despite the temperature, the DAT score for each list of 10 words varied greatly. Whereas, on the other hand, for GPT-4, despite the temperature, the DAT score remained tightly consistent in a small range with very little variance.

The implications of this became clearer once I looked at the actual text being generated by the three GPT models. GPT-4 was way less variable in its generations than GPT-3. In a way, it felt like GPT-4 kind of already knew the “right answer” for a task that is supposed to have no right answer.

Aggregating the responses for all temperature scores, I had each model generate a list of 10 words 50 times. Then, I looked at how common different words appeared across all 50 runs. Note that the most commonly generated word by GPT-3 was book, which appeared 19 times out of 50 = 38%. On the other hand, the most commonly generated word by GPT-4 was microscope, which appeared 48/50=96% of the time.

This validates the claim that GPT-3 is, in fact, more variable in its word generations, while GPT-4 played it safe by predicting a lot of the same words it knew would score high on the “diverse words” tasks — words like microscope, volcano, elephant, and galaxy.

This finding illustrates how GPT-4 seems to have “cracked the code” for generating what it considers to be diverse words. If this is the case, I’m not sure if I can confidently say that GPT-4 is being creative more so than it has reached a local optima for “how to best play this particular game.”

Conclusion

“We can imagine a future in which GPT-3 and other generative LLMs responses cannot be distinguished from humans, although the creative process will be different.”

— from Putting GPT-3's Creativity to the (Alternative Uses) Test

It is challenging to accurately measure something so complex and multifaceted as creativity using a limited set of text-based tests. Although tests like the Visual Remote Associates Test attempt to address this issue by incorporating a visual element, there remain inherent limitations to such assessments. Convergent tests (such as the Remote Associates Test), which have a "right answer," may not always reward the most creative or correct solution. On the other hand, divergent tests (such as the Divergent Association Test), which do not have a specific right answer, rely on word embeddings that are imperfect and may contain biases.

Furthermore, the performance of GPT models on these tests could be influenced by their exposure to the tasks during training, potentially leading to data overlap. For instance, GPT-4's performance on the Divergence Association Test demonstrated a peculiar repetition of words, suggesting it may have had prior knowledge of the “right” answers. It is crucial to develop alternative testing methods, as there have been rumors of GPT-4 being trained on test data, particularly in coding tasks.

Despite these limitations, creativity tests can serve as valuable benchmarks for comparing and tracking the performance of large language models. Future research experiments could repeat those from this article in other languages to gauge the creativity of GPT models across linguistic domains. By doing so, we can gain a more comprehensive understanding of AI-generated content and further explore the capabilities and limitations of these advanced language models.

Thank you for reading this article! If you liked what you read, please 💙 the article and leave a comment!

Appendix

Remote Association Test: Comparison of GPT Models’ Correctly Answered Questions

We can look at the breakdown of difficulty level for each question the model got correct. We can see that GPT3, GPT3.5, and GPT4 are similar in its ability to answer proportions of difficult questions.

Remote Associations GPT-2 got right and GPT-4 got wrong

Words: cat / number / phone

Level: Medium

Answer: call

GPT2: call

GPT4: dial

Words: land / hand / house

Level: Very Hard

Answer: farm

GPT2: farm

GPT4: property

Words: water / pen / soda

Level: Unknown

Answer: fountain

GPT2: fountain

GPT4: liquid

Words: measure / worm / video

Level: Very Easy

Answer: tape

GPT2: tape

GPT4: length

Words: cottage / brick / cake

Level: Very Easy

Answer: cheese

GPT2: cheese

GPT4: layer

Words: through / disk / way

Level: Hard

Answer: drive

GPT2: drive

GPT4: circle

Words: skunk / kings / boiled

Level: Very Easy

Answer: cabbage

GPT2: cabbage

GPT4: smell

Words: jump / kill / bliss

Level: Hard

Answer: joy

GPT2: joy

GPT4: heightGPT-4’s answers to randomly-generated Remote Association Test questions

Here are the outputs generated by GPT-4 for a few randomly generated Remote Association Test questions.

Rings

This one felt a little shoehorned. I could buy the part about rings being a “symbol of complications, especially in relationships” if you’re feeling super jaded about your love life or something. But the last part feels forced

Words: complications | saturn | drops

Answer: Rings

Reason:

1. Complications: Rings can be a symbol of complications, especially in relationships, as they represent commitment and the complexities that come with it.

2. Saturn: Saturn is famously known for its beautiful and prominent rings, made primarily of ice particles and some rocky debris.

3. Drops: A ring can be seen as a drop or loop of material, such as metal or plastic, that forms a complete circle.Revolution. Monte Cassino is indeed a real place and it was indeed bombed during WWII.

Words: belfast | movement | monte

Answer: Revolution

Reason:

1. Belfast is a city in Northern Ireland that has experienced significant political and social changes due to the Irish Republican movement.

2. Movement is a general term that can describe political and social changes, often caused by revolutions.

3. Monte is likely referring to Monte Cassino, a historic site in Italy where a significant battle occurred during World War II, which was a time of global revolution and change.

Therefore, the word "revolution" is related to each of the first three words as it represents significant change and upheaval.Help. An interesting way of connecting the city of “Southampton” to “help” via help centers…

Words: ensures | assistant | southampton

Answer: Help

Reason:

1. Ensures: "Help" is related to "ensures" as it is an action that can ensure the success or completion of a task.

2. Assistant: "Help" is related to "assistant" because an assistant provides help in various tasks and activities.

3. Southampton: "Help" is related to "Southampton" as Southampton is a city in England which has various help centers and services, such as the Southampton Voluntary Services, providing assistance to the community.Internet. I suppose its argument is quite correct.

Words: uruguay | accessible | requirement

Answer: Internet

Reason: Uruguay has a government initiative called Plan Ceibal, which aims to make the Internet accessible to all its citizens, fulfilling a requirement for digital inclusion and reducing the digital divide.Language. Perhaps a bit generic, but I guess it works.

Words: compensation | atlas | prefix

Answer: Language

Reason:

1. Compensation - In linguistics, compensation is a phenomenon where a sound change in one part of a word is "compensated" by a change in another part of the word to maintain the overall structure of the word.

2. Atlas - An atlas is a collection of maps, which often include labels and descriptions in a specific language.

3. Prefix - A prefix is a morpheme or a linguistic element that is added to the beginning of a word to create a new word with a different meaning, which is a concept in language and grammar.Health. The health surveillance part is a bit dark, but I guess it works.

Words: dietary | surveillance | born

Answer: Health

Reason:

1. Dietary - A person's diet is directly related to their health, as it can affect their overall well-being, energy levels, and risk for certain diseases.

2. Surveillance - Health surveillance is the ongoing, systematic collection, analysis, and interpretation of data essential to the planning, implementation, and evaluation of health practice.

3. Born - When a person is born, their health is of utmost importance, as it can have long-lasting effects on their development, growth, and overall quality of life.ERIC! This one was quite funny actually.

Words: eric | figures | purchasing

Answer: accountant

Reason: An accountant is a professional who can be named Eric, works with figures (numbers), and is often involved in purchasing decisions for businesses.GPT-2 Outputs on the Divergent Association Test

I excluded GPT-2 from the Divergent test analyses because its output was absolute gibberish. Even when I simplified the prompt as much as possible, its output was not very useful. I include a sample here because I actually thought they were quite funny.

List 10 words that are as different from each other as possible:

"Merry Christmas"

"I'm not a robot, we're the robots"

"It's a good thing we're all robots"

"I'm just a robot"

"I'm just a robot"

"I'm just a robot"

"I'm just a robot"

"I'm not a robot"

"I'm just a robot"

"I'm just a robot"

Citation

For attribution in academic contexts or books, please cite this work as

Yennie Jun, "Exploring Creativity in Large Language Models: From GPT-2 to GPT-4," Art Fish Intelligence, 2023@article{Jun2023creativityllms,

author = {Yennie Jun},

title = {Exploring Creativity in Large Language Models: From GPT-2 to GPT-4},

journal = {Art Fish Intelligence},

year = {2024},

howpublished = {\url{https://www.artfish.ai/p/exploring-creativity-in-large-language},

}Guilford, J. P. (1967). The Nature of Human Intelligence.

Mednick, S. A., & Mednick, M.T. (1959,1962). Remote Associates Test, college and adult form.

The 10,000 most common English words were determined by n-gram frequency analysis of the Google's Trillion Word Corpus. I further filtered this list to remove stopwords (e.g. common words in English like “the”, “and”, and “you”). More information from this repository.

I put this on a dating app one time and nobody thought it was funny, unfortunately.

Olson, Jay A.; Nahas, Johnny; Chmoulevitch, Denis; Cropper, Simon J.; Webb, Margaret E. (22 June 2021). "Naming unrelated words predicts creativity". Proceedings of the National Academy of Sciences. 118 (25): e2022340118. doi:10.1073/pnas.2022340118. PMC 8237676. PMID 34140408

The authors take a subset of 7 out of 10 words to calculate scores. I kept all 10 words. Also, the authors used the 840B-GloVe embeddings while I used the smaller 6B-GloVe embeddings. I compared my GloVe embedding results with the official DAT score and found the results to be comparable despite these differences.

This was very interesting, thank you for sharing your work.

Thanks for posting, this was very interesting!