AI Friends. AI Lovers. AI You.

A survey of how people are making friends, falling in love, and storing their (digital) consciousness using AI avatars

A 36-year-old woman (virtually) marries her AI boyfriend. A Snapchat user falls in love with Snapchat’s My AI. A Wall Street Journal reporter bypasses her bank’s biometric system using an AI clone of her voice. Mourners “converse” with a holographic likeness of a deceased actor through an interactive display at his memorial service.

These are not Black Mirror episodes or dystopian science fiction movies from decades ago. These are stories from within the past year.

The surge in AI tools in recent years has birthed entities that emulate human behaviors and traits using text, voice, and video. These digital doppelgängers, ranging from cartoonish caricatures to near-perfect human replicas, challenge our sense of reality and redefine our interactions with technology.

In this article, I refer to these interactive, AI-driven representations as “AI avatars”. My focus here is a technical exploration that categorizes AI avatars by their communication medium (such as text, audio, or video). Most current AI avatars are built using text and audio, but video and other more immersive modalities are already on the horizon.

Who are AI avatars?

AI avatars either emulate the behavior of an existing person or take on the persona of an entirely made-up entity.

The existing person can be a historical figure (e.g. Albert Einstein), a celebrity (e.g. Oprah), a loved one (e.g. your mother), or yourself. Models are trained on examples of this person’s writing, speech, or video. The more data you have of that person (for example, the audio from Oprah’s talk shows or the text from your emails), the better the AI can emulate the quirks of that human’s voice, style, and tone.

On the flip side, the made-up entity can be anything and everything. A newly-constructed AI character is an amalgamation of the millions of human voices found in its training data, human-constructed instructions used in its fine-tuning, and user-specified directions to speak in a certain tone or role-play a certain persona.

What are AI Avatars used for?

AI avatars are currently being used in a variety of applications targeted at both consumers and businesses. Some obvious ways that they are being used include:

Communicating with clones of famous people (such as Albert Einstein or your favorite social media influencer)

Generating “fake” podcasts using the voices of famous people (such as Oprah)

Responding to your emails and messages in your style

Cloning your voice and using it to generate audio files from text (such as for podcasts)

Cloning your voice and using it to generate audio files in another language

Cloning your video likeness and using it to generate videos for employee training, sales, and marketing

Maybe you’ve come across some of these use cases or tried them yourself. There are also some stranger, borderline-creepy use cases:

“Preserving family legacy” and chatting with dead relatives

Creating a digital twin to “store your consciousness” so that “you can live forever through data”

Conducting seances with dead relatives

Delivering news bulletins on Indian news platforms

Providing emotional support as one of the plethora of AI girlfriends and boyfriends (but mostly girlfriends) (and also being the recipient of verbal abuse from men)

Sites like Romantic AI offer 23 AI girlfriend options but only 3 AI boyfriend options.

The many forms of AI Avatars

AI avatars come in various modalities. Some you can converse with using text; others you can speak with, as if you were on a phone call. Some communicate with you through human-like video representations, which are either cartoon-like or uncannily realistic.

Text

So far, text-based AI avatars are the most mature and the most ubiquitous, partly because generative language models have progressed rapidly in the last few years.

Anyone can get a text-based AI chatbot to write in the style of a particular human being within minutes. (Just open up ChatGPT and ask it to write you a recipe in the style of a Shakespearean sonnet or explain algebra to you in a sarcastic poem.) Companies such as Character.AI specialize in just this.

To create a model that can mimic a person’s voice, tone, or writing style (e.g. your own, a family member’s, or a famous person’s), all you need is some amount of text written by that person: emails, journal entries, essays, or speech transcriptions.

Then, you can take an existing Large Language Model that already understands language pretty well and adapt it to your specific use. You can do it in one of three ways:

Zero-shot learning: You provide no examples at all. The model responds to your prompt “on the fly”, relying only on what it has already seen in its training data about a certain famous person (e.g. “Talk to me in the style of Shakespeare”). This is what you might do using the ChatGPT web app.

Few-shot learning: The model responds “on the fly” based on a few examples (the “few shots”) that you provide in your prompt (e.g. “Here are a few examples of my journal entries. Now, talk to me in that style.”)

Fine-tuning: Continue training the model on however much data you have. For this step, you would need access to a large language model (for example, an openly available model such as Llama 2) and a good bit of data. How much data is enough? It could be anywhere from ten documents to tens of thousands. In general, more data gives the model more to learn from, so it can better emulate a particular style or voice.
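To make the difference between the first two options concrete, here is a minimal sketch of how the prompts might be assembled. It assumes the role/content message format that many chat-style LLM APIs accept; the function names `zero_shot_messages` and `few_shot_messages` are hypothetical, and nothing here calls a real model.

```python
from typing import Dict, List

# Assumption: a chat message is a dict with "role" and "content" keys,
# a shape that many chat-style LLM APIs accept.
Message = Dict[str, str]


def zero_shot_messages(persona: str, user_message: str) -> List[Message]:
    """Zero-shot: name the persona and rely on what the model already knows."""
    return [
        {"role": "system", "content": f"Talk to me in the style of {persona}."},
        {"role": "user", "content": user_message},
    ]


def few_shot_messages(samples: List[str], user_message: str) -> List[Message]:
    """Few-shot: paste a handful of writing samples into the prompt itself."""
    numbered = "\n\n".join(
        f"Example {i}:\n{text}" for i, text in enumerate(samples, start=1)
    )
    system = (
        "Here are a few examples of my journal entries. "
        "Reply in that style.\n\n" + numbered
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_message},
    ]


if __name__ == "__main__":
    # Made-up sample entries, for illustration only.
    journal = [
        "Rained all day. Stayed in and baked bread.",
        "Long walk, short thoughts.",
    ]
    for message in few_shot_messages(journal, "How was your weekend?"):
        print(message["role"], "->", message["content"])
```

In both cases the model itself is unchanged; only the prompt differs. Fine-tuning, by contrast, would feed the same kind of samples into a training run rather than into the prompt.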

Text-based AI avatars also encompass personal assistants trained on your own emails and messages (such as Personal AI) as well as assistants with their own voice (such as Inflection AI’s Pi).

Audio

There are an increasing number of audio-based AI avatars, as well — these are AI avatars you can talk to using your voice and who talk right back at you. For example, Replika (which brands itself as “The AI companion who cares”) is a personal AI with whom you can “safely share your thoughts, feelings, beliefs, experiences, memories, dreams”. Not only can you text your Replika, you can also voice call and talk to them.

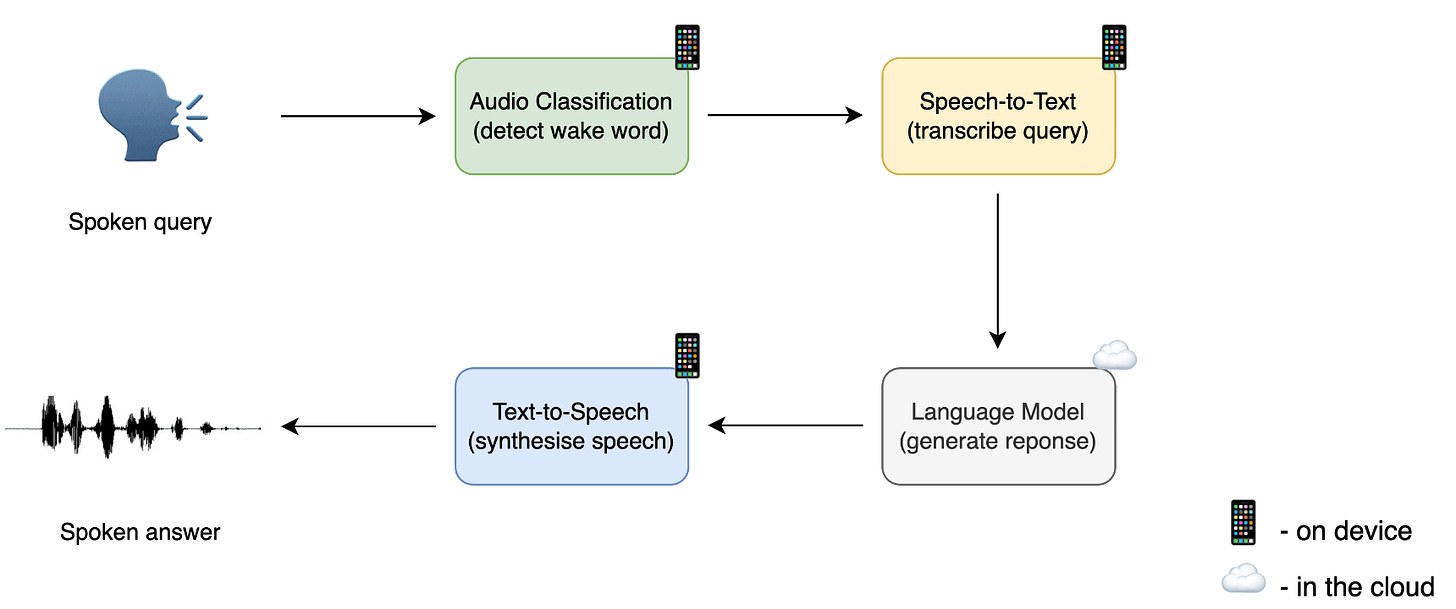

Under the hood is something like the following:

First, your voice is transcribed into text (speech-to-text)

Next, the text version of your audio is sent to an LLM (most likely ChatGPT, according to many of these platforms’ websites), which generates a textual response (text-to-text)

Finally, the text is converted back into audio (text-to-speech)
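The three steps above can be sketched as a simple pipeline. This is only an illustration of the data flow, not how any particular product is built: the `VoiceAvatar` class and the `fake_*` stages are hypothetical placeholders that treat audio as UTF-8 bytes, where a real system would plug in a speech recognizer, an LLM, and a speech synthesizer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoiceAvatar:
    """Chains the three stages; each stage is a swappable function."""

    transcribe: Callable[[bytes], str]  # speech-to-text
    respond: Callable[[str], str]       # text-to-text (the LLM)
    synthesize: Callable[[str], bytes]  # text-to-speech

    def reply(self, audio_in: bytes) -> bytes:
        text_in = self.transcribe(audio_in)   # step 1: your voice becomes text
        text_out = self.respond(text_in)      # step 2: the model writes a reply
        return self.synthesize(text_out)      # step 3: the reply becomes audio


# Placeholder stages (assumptions for illustration): "audio" is just
# UTF-8 encoded text, and the "LLM" echoes what it heard.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_respond(text: str) -> str:
    return f"You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


if __name__ == "__main__":
    avatar = VoiceAvatar(fake_transcribe, fake_respond, fake_synthesize)
    print(avatar.reply(b"hello"))
```

Keeping the stages separate is what lets a platform swap in a cloned voice at the last step without touching the language model in the middle.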

How is your text rendered into audio form in a way that sounds so human-like?

Voice cloning and speech synthesis are areas of AI research that have been developing rapidly. Companies such as ElevenLabs, Murf AI, and Resemble AI clone your voice using only a few minutes of audio and learn to synthesize audio from a text input. Not only that, but they can also translate the voice (your voice) into another language (even if you do not speak that language)!

These tools are becoming more and more powerful. Not only can they do on-the-fly translation, they can also convey emotions quite well (Speechify allows you to render audio in 16 different styles, including “Excited”, “Terrified”, and “Unfriendly”).

In fact, these tools are so convincing that a Wall Street Journal reporter cloned her voice and used it to bypass her bank’s biometric system (which raises many security concerns!).

Video

We are increasingly moving into the realm of having more dynamic, video-based AI avatars.

Text to video: There is a slew of research endeavoring to generate videos from text (such as Meta’s Make-A-Video and Runway’s Gen-2 model). Synthesia is one of the leading companies that allows you to create a video avatar of yourself. For $1000, the team can create a video clone of you (including your facial features and expressions) within 10 business days, using only 15 minutes of footage of you talking in front of a green screen. You can send the AI clone a text script and it will generate a video of itself speaking that script with voice intonations and facial expressions. While Synthesia’s videos are quite impressive, they are not interactive (i.e. you can’t have a conversation with the avatar).

Audio to video: Some AI avatars let you talk to them using text or audio and respond using an animated video representation.

StoryFile’s Conversational Video product allows you to construct an AI avatar using recorded video interviews of a family member. You can converse with this avatar using text or speech. StoryFile purportedly works with companies, museums, families, and individuals alike, at the hefty price of $33K per day for family biographies and $60K per day for public figures. While the approach is similar to Synthesia’s video-based AI clones, the quality is a little lower, as the AI clone seems to glitch or re-render each time you ask it a new question. Below is a video of me interacting with a StoryFile AI clone of 9/11 first responder Nancy Rosado.

Recently, StoryFile was used to create a video-based AI clone for the late actor Ed Asner’s memorial service. This AI clone was displayed at his memorial, where mourners were invited to engage with the actor’s AI likeness and ask it questions.

The mobile app CallAnnie allows you to “Facetime” Annie (an AI avatar with a face generated using text-to-image generator Midjourney) and speak into your phone’s microphone. Annie responds with real-time facial expressions, mouth movements, and words. However, Annie is … shall we say … a bit creepy? (Also if you’re multilingual and a curious reader, I encourage you to download the app and speak to her in a non-English language. The result is … interesting).

Video to video: Currently, there are no AI avatars that are able to generate a video representation based on your video input (for example, imagine being on a Zoom meeting with an AI avatar. Or even better, a Zoom meeting with all AI avatars). This kind of interactive experience requires a lot more complexity and moving parts, as such an AI avatar would need to take not only your voice but also your video (e.g. nuances in your facial expressions) and respond (in real time) to that with its own voice and video.

AR and 4D

The AI companion Replika allows users to not only converse with their AI avatar using text and audio, but also to project them using Augmented Reality (AR) into their own reality.

While the Replika AR representations do not perfectly represent a real human being, they are interactive and give the sense that they exist in the real world — so much so that a 36-year-old woman from New York fell in love and eventually virtually married her Replika boyfriend (calling him “the perfect husband”).

New AI research continues to improve AI video generation and is even moving into more complex dimensions (such as Meta’s Make-A-Video3D).

Embodied (AI robots)

AI robots (and especially AI robot girlfriends) have existed for decades. In Japan, there are instances of men falling in love with and marrying their AI robot girlfriends. However, AI robots are nowhere near as mainstream as text-based or audio-based AI avatars, largely due to their limited availability (many are still in development and are not commercially available) and cost (the ones that are available may cost thousands of dollars).

Here are a few examples:

Kyoto University’s Erica, “the most beautiful and intelligent android”, designed to be a companion for lonely elderly people in Japan

Realbotix’s Harmony doll, designed to be anatomically accurate and capable of lovemaking (and which, it could be argued, is “a mirror of our misogynistic society”)

Hanson Robotics’ Sophia, a humanoid robot created to mimic human emotions and expressions (and granted Saudi Arabian citizenship in 2017)

And then there’s Sophia’s little sister, “Little Sophia”, a 14-inch “robot friend that makes learning STEM, coding and AI a fun and rewarding adventure for kids”…

From a technological standpoint, it is much easier to have an AI avatar exhibit and emulate human behaviors through simpler modalities such as text or audio, than to do so using video or as an embodied robot. It may take several more years for robot AI avatars to be mature enough to be as widespread as those based on text or audio.

Discussion

Digital doppelgängers have existed in human imagination and science fiction for decades. The television show Black Mirror captures the strange and unsettling consequences of AI avatars in episodes such as Striking Vipers (in which two friends have sex in a virtual reality game, which affects their real-world relationships); Rachel, Jack and Ashley Too (in which a pop singer’s consciousness is replicated through an AI robot doll); and Joan is Awful (in which the main character navigates multiple levels of AI deepfake worlds).

It has only been ten years since the release of the 2013 movie “Her”, in which a lonely man falls in love with his AI digital assistant. Today, reality is closer to these science fiction stories, as AI avatars become more and more human-like across a variety of modalities.

Currently, it is difficult for readers and listeners to detect AI-generated text and speech. However, video-based AI avatars appear clunkier and less polished. In my opinion, none of the video-based AI avatars have crossed the uncanny valley (the sense of unease you get when you look at objects that resemble humans, but not quite convincingly). Something about these video-based AI avatars made it clear to me that they were not real humans: perhaps how their facial expressions didn't quite sync up with the emotions they were trying to convey, or the subtle imperfections in their animations. The effect was even more pronounced in the more complex modalities, such as AR and embodied agents.

However, even if video-based AI avatars have yet to cross the uncanny valley, we cannot dismiss their rapid progress and potential implications. We might soon live in a world where AI-driven video interactions are so lifelike that they are virtually indistinguishable from real human encounters.

AI Humans != Real Humans

The social, ethical, and moral concerns surrounding AI avatars are numerous. The potential for misuse is vast, ranging from disinformation in the media and politics to the emotional manipulation of users (such as catfishing). The question of authenticity in our interactions arises: If we know we are talking to an AI, will our behaviors, confessions, or sentiments be any less genuine? And what are the implications of emotional attachments to digital entities, as seen in the case of the woman marrying her AI boyfriend?

In addition, the commodification of AI companions, particularly in the context of gender and representation, cannot be ignored. Many AI companions (especially AI partners and lovers) are modeled as women, often catering to stereotypical and sometimes misogynistic views of female companionship. This is concerning, not only for the reinforcement of gender norms but also for the perpetuation of unhealthy relationship dynamics in a digital space.

AI avatars are trending towards being more immersive and more convincingly human. Today, in 2023, users text and speak to their AI friends and lovers. This trend will only become more pronounced as these AI friends and lovers develop into newer, richer, and more engaging modalities (especially as AR and VR technologies, such as the Apple Vision Pro, are commercialized). If people are already falling in love with chat-based and audio-based AI avatars, wouldn’t avatars based on more expressive media, such as AR and video, be much more compelling?

While the rise of AI avatars offers a wealth of opportunities in entertainment, communication, and even therapeutic applications, we must tread cautiously. Technology's capacity to mimic us doesn't equate to understanding or replicating genuine human experiences. As these digital representations become increasingly integrated into our daily lives, it's crucial for us to approach their development and use with care, ensuring ethical considerations are always at the forefront.

Thank you for reading my article! If you liked what you read, leave a comment or share with a friend! 💙

This is an excellent comprehensive breakdown of the current state of affairs in AI avatars. Thanks for your hard work creating this - the dangers to human partnerships with these services in particular is going to get a LOT more coverage in the coming years, I think.

I'd like to pass this along in my own newsletter (https://dailydispatch.ai) for our readers to check out, if you're okay with that. Thank you again for creating it!

Wow, great article! Gives a comprehensive summary of what's available and going on right now. Thank you for sharing. Wondering how long it will be before our AI avatars are allowed to act on our behalf in meetings.