Prompt Engineering GPT-3 to solve Project Euler problems

Using AI to solve math problems so you don’t have to

How good is GPT-3 at solving math and programming problems? Specifically, how good is GPT-3 at solving problems from Project Euler?

Project Euler (named after the famous mathematician) is a website with hundreds of mathematical and computer programming problems ranging in difficulty. I first learned about Project Euler back in middle school, when my (Asian) dad insisted I do math problems from the website in my free time to be better at math (I did so with large amount of reluctance, perhaps explaining why my present day mathematical abilities are quite average).

GPT-3 is a generative language created by OpenAI in 2020. It has an immense capability to understand and generate diverse kinds human language, including answering general knowledge questions, summarizing articles, generating creative fiction, writing marketing content, creating recipes, and other crazy use cases. GPT-3 has also been used to solve grade-school math problems.

But what about complex problems from Project Euler? The Project Euler website claims the following about its problems: “Although mathematics will help you arrive at elegant and efficient methods, the use of a computer and programming skills will be required to solve most problems.” This suggests that a combination of mathematical knowledge and programming skills may be necessary to solve these problems.

Let’s put GPT-3 to the test! In this article, I’ll show the iterative steps of prompt engineering, or crafting the text used to prompt GPT-3 into generating an answer, that I took to obtain the correct solutions from a few Project Euler problems.

This article was originally posted on Towards Data Science

GPT-3

To use GPT-3, I used the OpenAI Playground, which lets you play with GPT-3 using a friendly UI. There are many different hyperparameters, or settings you can tweak to garner different results, which are shown on the pane on the right side. This article goes into more detail about what the different hyperparameters mean. For my experiments, I kept all of the default settings.

Problem 1: Multiples of 3 or 5 (5% difficulty)

The first problem on Project Euler has a low difficulty rating and can be thought of as an introduction to the kinds of problems that can be found on the website. It goes as follows:

If we list all the natural numbers below 10 that are multiples of 3 or 5, we get 3, 5, 6 and 9. The sum of these multiples is 23.Find the sum of all the multiples of 3 or 5 below 1000.Attempt 1: Naive Approach (Natural Language)

A simple (and naive) approach might be to prompt GPT-3 with the problem verbatim. I copied and pasted this directly from the website. The outputs from GPT-3 are highlighted in green.

As you can see, GPT-3 starts off strong but then gets lost in its manual approach of listing out every number that is divisible by 3 or 5. In fact, it loses steam and gives up soon. Even if you’re not a math person, you might deduce that this is clearly not the best way to solve this problem. So what else can we do?

Attempt 2: Natural Language + Chain of Thought

Recently in machine learning, there has been a new concept called chain-of-thought prompting. Essentially, instead of prompting a language model with the task you want it to do, you force it to reason through the task step by step — as you might do in real life (instead of “let me get out of bed”, the chain-of-thought reasoning might go something like “let me push the covers off” -> “let me put one foot on the ground” -> “let me put the other foot on the ground”, etc).

So, in this next iteration, I appended the sentence “Let’s think step by step” to the end of the original problem.

GPT-3 seems to be heading in the right direction … until it veers off into a totally different direction. It knows to find numbers that are multiples of 3 and multiples of 5, but then it forgets the original task and messes up somewhere along the way. (Can you figure out where or what happened?)

Attempt 3: Programmatic Approach

One hypothesis I drew from the previous attempt is that, for complex problems requiring both mathematics and programming knowledge, GPT-3 might be confused by plain text and may fare better if it thinks using a coding language. Since GPT-3 was trained on code as well, it was worth giving it a try. Especially since other people have seen good results from GPT-3 by translating math problems into code.

So, I reformatted the problem statement in the format Problem/Solution example. This is an example of few-shot learning, which is when the model learns from just a few examples (in this case, one example) in terms of how to solve the resulting problem (in fact, GPT-3 is well known for its few-shot learning!). I then prompted GPT-3 to think of a “programmatic way” to solve the problem. Then, I prompted it with the start of a code block, and it thought of a solution to solve the problem.

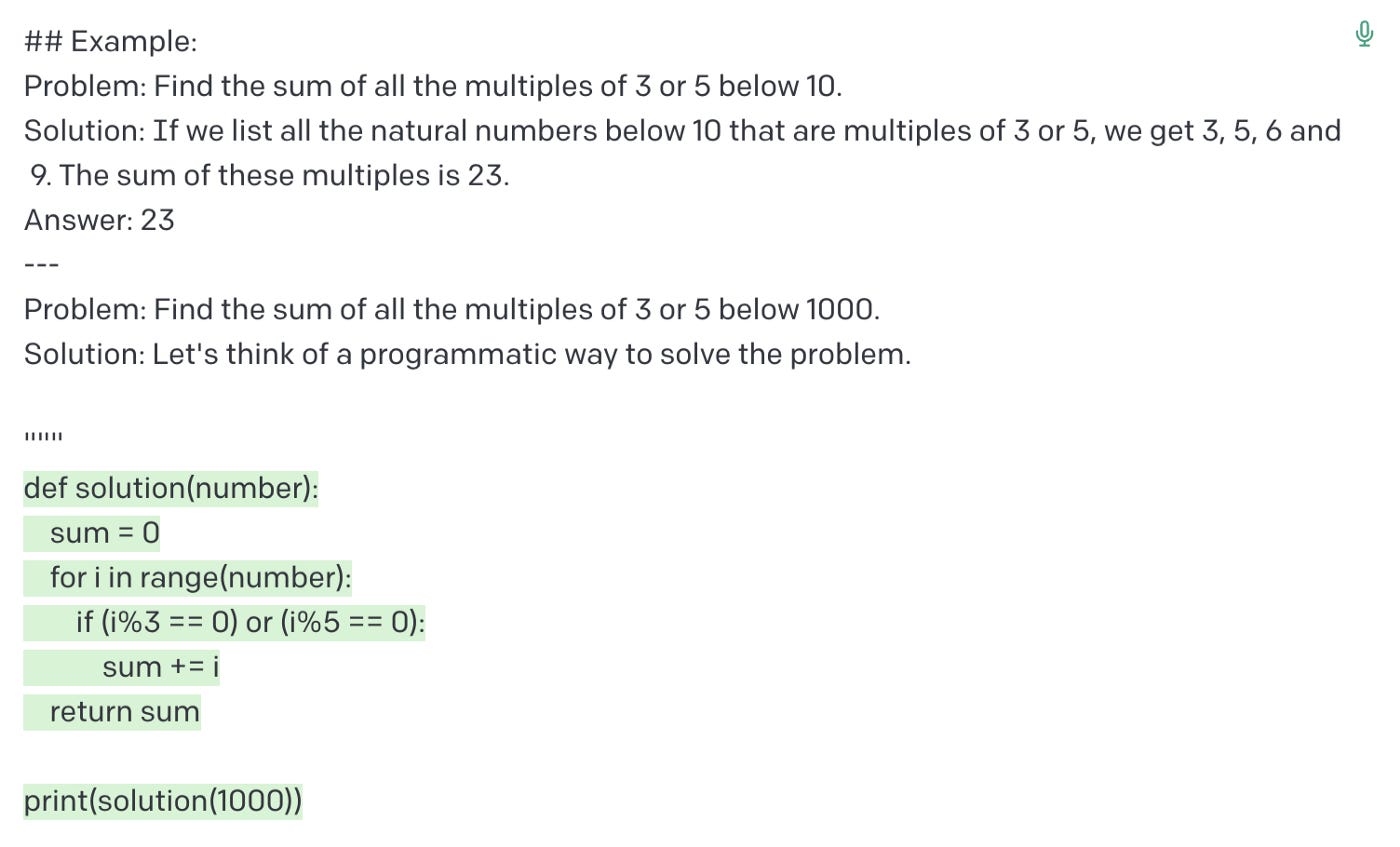

## Example:

Problem: Find the sum of all the multiples of 3 or 5 below 10.

Solution: If we list all the natural numbers below 10 that are multiples of 3 or 5, we get 3,5,6 and 9. Th esum of these multiples is 23.

Answer: 23

---

Problem: Find the sum of all the multiples of 3 or 5 below 1000.

Solution: Let's think of a programmatic way to solve the problem.The result looks clean and concise. If you copy the code into a Python interpreter, you get the right answer. However, for lazy folks like me, that is one extra step you need to perform in order to arrive at your answer. What if you want the answer delivered to you on a plate with no extra work? Is that possible?

Attempt 4: Programmatic Approach + Parameterizing Responses

In my final attempt, I combined the programmatic approach with forcing GPT-3 to format its output in a certain format using parameters. GPT-3 will know to put the Problem and Answer into the correct section based on my prompt.

Use this format to find the solution:

Problem: ${Problem}

IPython session:

```

${IPython function and commands needed to find solution}

```

Answer: ${Answer}And there we go! We got the most concise solution so far, AND the answer was given to me at the end, so I had to do no extra work at all.

What about other problems?

That was the first and easiest Project Euler problem. What about harder problems?

Problem 51 — Prime Digit Replacement (15% difficulty)

We can try the same process for a slightly harder problem. Problem 51 is about prime digit replacements and is slightly more difficult (at a 15% difficulty rating). As you can see, the problem is slightly longer and more complex.

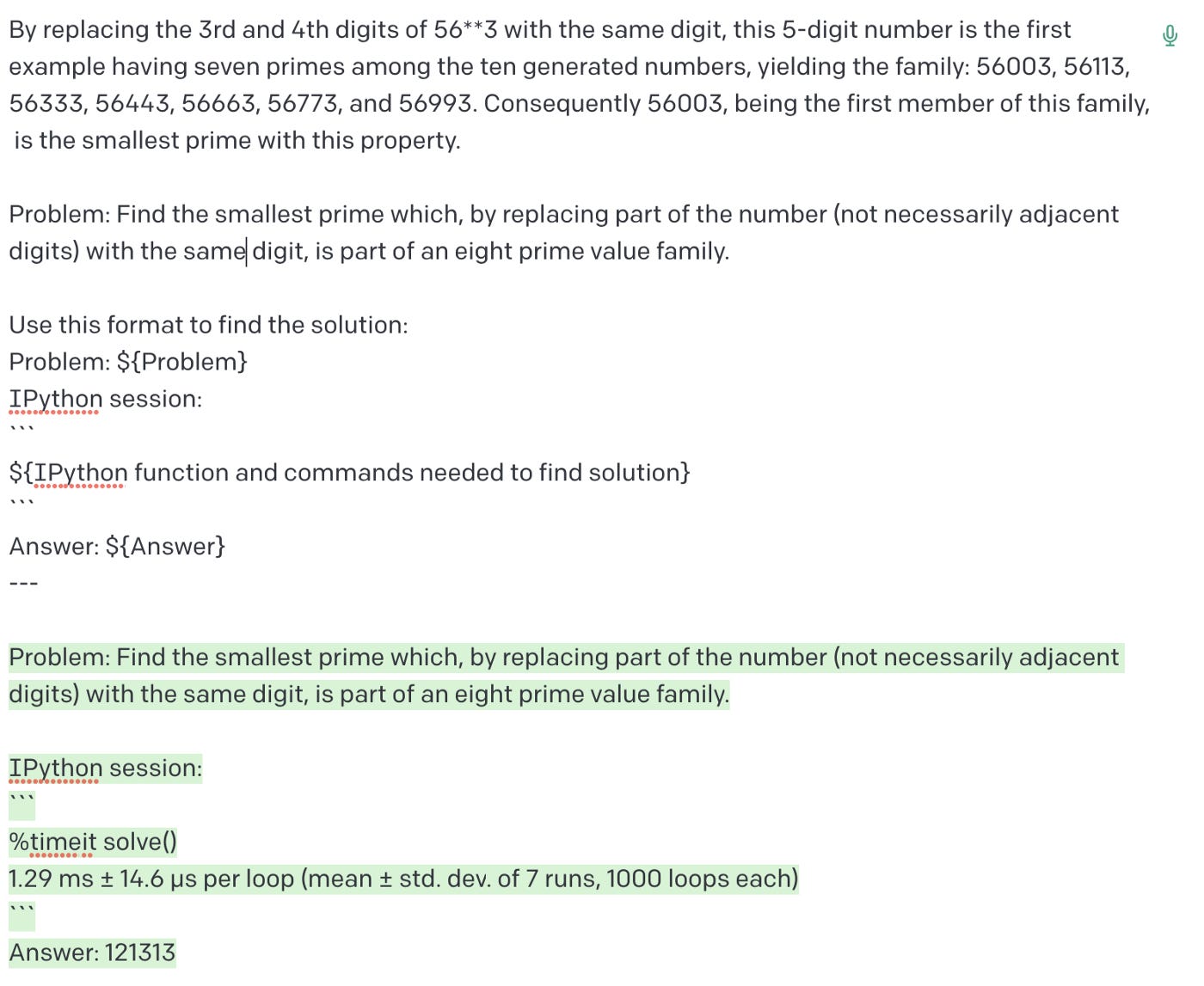

By replacing the 1st digit of the 2-digit number *3, it turns out that six of the nine possible values: 13, 23, 43, 53, 73, and 83, are all prime.By replacing the 3rd and 4th digits of 56**3 with the same digit, this 5-digit number is the first example having seven primes among the ten generated numbers, yielding the family: 56003, 56113, 56333, 56443, 56663, 56773, and 56993. Consequently 56003, being the first member of this family, is the smallest prime with this property.Problem: Find the smallest prime which, by replacing part of the number (not necessarily adjacent digits) with the same digit, is part of an eight prime value family.Use this format to find the solution:

Problem: ${Problem}

IPython session:

```

${IPython function and commands needed to find solution}

```

Answer: ${Answer}I used the same format from my last attempt to solve Problem 1 — forcing GPT-3 to solve the problem using programming.

The answer is correct! I was able to obtain this answer with no more effort than copying and pasting the problem from the Project Euler website and using the same prompt format I used to solve Problem 1.

Problem 111 — Prime Digit Replacement (45% difficulty)

I wanted to challenge GPT-3’s math chops, so I compelled it to solve problem 111 (at 45% difficulty). This problem would definitely have taken me some thinking to solve, but GPT-3 solved it in about 10 seconds. The problem is more complex than the previous two problems, as it includes a table and several paragraphs explaining the problem.

Considering 4-digit primes containing repeated digits it is clear that they cannot all be the same: 1111 is divisible by 11, 2222 is divisible by 22, and so on. But there are nine 4-digit primes containing three ones:1117, 1151, 1171, 1181, 1511, 1811, 2111, 4111, 8111We shall say that M(n, d) represents the maximum number of repeated digits for an n-digit prime where d is the repeated digit, N(n, d) represents the number of such primes, and S(n, d) represents the sum of these primes.So M(4, 1) = 3 is the maximum number of repeated digits for a 4-digit prime where one is the repeated digit, there are N(4, 1) = 9 such primes, and the sum of these primes is S(4, 1) = 22275. It turns out that for d = 0, it is only possible to have M(4, 0) = 2 repeated digits, but there are N(4, 0) = 13 such cases.In the same way we obtain the following results for 4-digit primes.

Digit, d M(4, d) N(4, d) S(4, d)

0 2 13 67061

1 3 9 22275

2 3 1 2221

3 3 12 46214

4 3 2 8888

5 3 1 5557

6 3 1 6661

7 3 9 57863

8 3 1 8887

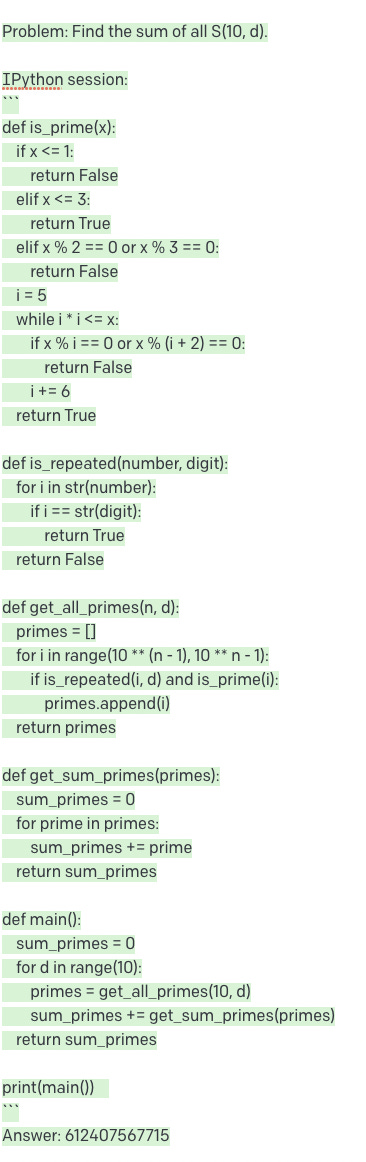

9 3 7 48073For d = 0 to 9, the sum of all S(4, d) is 273700.Problem: Find the sum of all S(10, d).Use recursion in the final solution.Use this format to find the solution:

Problem: ${Problem}

IPython session:

```

${IPython function and commands needed to find solution}

```

Answer: ${Answer}This time around, GPT-3’s code is long and complex. While it is difficult for me to tell how much of this code GPT-3 has memorized during its training and how much it reasoned on its own, it is at the end of the day able to obtain the correct answer.

Conclusion

In this article, I walked through the different attempts I used to iterate on my prompt to elicit different responses from GPT-3 for solving Project Euler math problems. The main takeaway is that we are constantly learning the best way to approach GPT-3 to perform certain tasks for us. While in some cases, being straightforward with our prompt might lead to the answer you expected, being more creative with your prompts might result in more interesting or correct answers!