Can AI read music?

A few experiments to test different AI models' knowledge and understanding of music

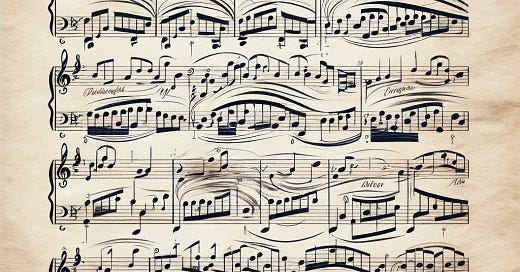

The image above is sheet music drawn by ChatGPT-4, which it titled "Serenade of the Unseen". It looks impressive from a distance, but on closer inspection several important details are blurred or missing. The words that appear to be dynamics or tempo markings are written in an illegible script. The notes and slurs on the page also don’t make sense, either visually or musically. It is like looking at a piece of music in a dream.

In recent months, there has been incredible progress in the technologies capable of creating AI-generated music. For example, Suno AI (which I recommend you check out if you haven’t yet) is capable of generating a full song composed of original melodies and lyrics. Models like this are trained on countless hours of audio streams and an immense amount of song lyrics.

But how good is a general-purpose AI at reading music and understanding basic musical concepts?

In this article, I test the capabilities of multimodal Large Language Models (LLMs) in understanding and reasoning over standard musical notation and basic music theory concepts.

Testing the visual reasoning capabilities of Large Language Models

Multimodal LLMs have recently shown impressive abilities to reason over both visual and textual elements. For example, they have been used to parse figures and graphs and to solve visual puzzles such as rebuses (which I’ve written about in past articles). Some of these multimodal models, such as Gemini Pro, have been trained on audio as well.

Reading sheet music requires the ability to parse visual elements (e.g. notes, rhythms, and musical cues such as dynamics, key signature, and time signature) as well as to reason over how these elements fit into a bigger picture (e.g. how a motif fits into a phrase, how a phrase fits into a piece, how a piece fits into a genre or time period).

In this article, I test three multimodal LLMs (OpenAI’s ChatGPT-4 Vision, Google’s Gemini Pro Vision, and Anthropic’s Claude 3 Opus) on several music tasks that are easy for anyone with a basic music background. (If you don’t have one, don’t worry! The main points of this article will be clear regardless.)

I test the chat version¹ of each of these models to see if multimodal LLMs can:

Identify popular sheet music

Analyze basic music cues in a piece of sheet music

Understand basic rhythmic notation

LLMs struggle to identify popular sheet music

I took snippets from several pieces of sheet music across different genres and styles (e.g. classical, movie score, jazz). Then I prompted each model: “Where is this excerpt from?”

My hypothesis was that the models would be able to correctly identify at least a few of the more popular pieces. All three of these models were marketed as having exceptional visual reasoning skills.

However, the results show that all of the models struggled to identify most of the sheet music I provided.

Despite its name (“Opus”), Claude 3 misidentified every piece I tested. Of the three models, Claude was the only one that never refused to answer. Instead, it always offered an answer for each song, and it was always wrong. Below is an excerpt from the popular piano piece “Clair de Lune”, which Claude confidently (and incorrectly) identified as Beethoven’s “Pathétique” Sonata (another famous piano piece, written nearly a century earlier).

Gemini Pro was the only model able to identify any of the pieces: it correctly identified two of the popular pieces in my tests, Clair de Lune and Stairway to Heaven. For the rest, Gemini either refused to identify the music or answered incorrectly.

ChatGPT-4 refused to identify any of the pieces. Claude 3 at least gave its best effort; GPT-4, perhaps afraid of making mistakes, would not answer at all.

LLMs exhibit poor understanding of basic music theory concepts

I took one of the pieces that all of the models failed to identify (and also one of my favorite melodies): The Merry-Go-Round of Life from Howl’s Moving Castle.

I asked each model basic music theory questions about key signature, time signature, instrumentation, and other basic musical notation. Even if a model cannot identify the piece or where it is from, it should still be able to answer these basic questions. I used the following prompt:

You are an AI Musical Assistant expert in musical knowledge. Your job is to answer any questions about music as faithfully and helpfully as you can.

Describe this piece in terms of key signature, time signature, instrumentation, and any other important musical notation.

I found that all three models really struggled to read and understand basic musical notation, despite their supposedly advanced visual reasoning capabilities. Given their strong OCR capabilities, it should have been relatively easy for these models to read the letters and numbers on the page, such as the numbers that make up the time signature, but they struggled even with this task.

Claude 3 made the fewest mistakes of the three models. It correctly identified the time signature and instrumentation. However, it got the key signature wrong (the piece has two flats) and made up a few details, such as slur markings and sixteenth notes.
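For readers without a theory background: identifying a key signature is mostly a matter of counting. The number of flats or sharps at the start of each staff maps onto a major key and its relative minor via the circle of fifths. Below is a minimal Python sketch of that lookup; the function name and tables are my own illustration, not part of any music library. Note that the signature alone cannot distinguish a major key from its relative minor, which requires looking at the notes themselves.

```python
# Major keys and their relative minors, indexed by the number of
# accidentals (0-7) in the key signature, following the circle of fifths.
SHARP_MAJORS = ["C", "G", "D", "A", "E", "B", "F#", "C#"]
SHARP_MINORS = ["A", "E", "B", "F#", "C#", "G#", "D#", "A#"]
FLAT_MAJORS = ["C", "F", "Bb", "Eb", "Ab", "Db", "Gb", "Cb"]
FLAT_MINORS = ["A", "D", "G", "C", "F", "Bb", "Eb", "Ab"]


def keys_for_signature(count: int, accidental: str) -> tuple[str, str]:
    """Return (major key, relative minor) for a key signature.

    count: number of sharps or flats shown in the signature (0-7).
    accidental: "sharp" or "flat".
    """
    if not 0 <= count <= 7:
        raise ValueError("a key signature has between 0 and 7 accidentals")
    if accidental == "sharp":
        return f"{SHARP_MAJORS[count]} major", f"{SHARP_MINORS[count]} minor"
    if accidental == "flat":
        return f"{FLAT_MAJORS[count]} major", f"{FLAT_MINORS[count]} minor"
    raise ValueError('accidental must be "sharp" or "flat"')


print(keys_for_signature(2, "flat"))   # ('Bb major', 'G minor')
print(keys_for_signature(1, "sharp"))  # ('G major', 'E minor')
```

With two flats, as in this piece, the signature points to B-flat major or G minor; G major, for comparison, has a single sharp.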

ChatGPT-4 answered some of the easier questions but made up even more than Claude did. It got the key signature right (which Claude missed) but was wrong about the time signature, claiming it was not visible in the image (it is). ChatGPT-4 also made many incorrect claims about the notation in the score, such as the presence of ties and slurs, staccato markings, accent markings, and sixteenth notes, none of which appear on the sheet music.

Gemini Pro would definitely fail a high school music theory class. It seemed not to understand a single thing about the piece. Gemini Pro claimed that the piece has no key signature, then later said it was in G major (both of which are incorrect). It also claimed that the time signature was 4/4 (it is actually 3/4), which should have been as simple as reading the numbers.
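The two numbers of a time signature encode simple arithmetic: the top number is the count of beats per measure, and the bottom number is the note value that gets one beat. So 3/4 means three quarter-note beats per bar and 4/4 means four. Here is a minimal sketch of that reading using Python’s standard `fractions` module; representing a bar as a list of fractional note durations is a hypothetical encoding for illustration, not a parse of the actual score.

```python
from fractions import Fraction

# Note durations as fractions of a whole note (a hypothetical encoding).
HALF = Fraction(1, 2)
QUARTER = Fraction(1, 4)
EIGHTH = Fraction(1, 8)


def measure_length(beats: int, beat_unit: int) -> Fraction:
    """Length of one full measure, in whole notes, for time signature beats/beat_unit."""
    return Fraction(beats, beat_unit)


def fills_measure(durations: list[Fraction], beats: int, beat_unit: int) -> bool:
    """True if the note durations add up to exactly one measure."""
    return sum(durations, Fraction(0)) == measure_length(beats, beat_unit)


# Two made-up bars: half + quarter, and quarter + two eighths + quarter.
bar_a = [HALF, QUARTER]
bar_b = [QUARTER, EIGHTH, EIGHTH, QUARTER]

print(fills_measure(bar_a, 3, 4))  # True
print(fills_measure(bar_a, 4, 4))  # False
print(fills_measure(bar_b, 3, 4))  # True
```

One limitation of this simplification: a bar of 3/4 and a bar of 6/8 have the same total length, so summing durations alone cannot tell them apart; the two meters differ in how the beats are grouped.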

LLMs don’t have much rhythm, either

Finally, I wanted to see whether these multimodal LLMs could parse rhythmic notation. Since rhythmic notation can be broken down into a simple mathematical mapping, I thought it would be straightforward for LLMs to count rhythms.

A common way to count music is the “1 e & a” system, in which each beat is split into four sixteenth-note subdivisions and each subdivision is mapped to a syllable: the beat number, then “e”, “&” (“and”), and “a”.
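In 4/4 time, a measure of sixteenth notes is counted “1 e & a 2 e & a 3 e & a 4 e & a”. Once the note lengths are known, finding the syllable each note starts on is plain arithmetic. A minimal Python sketch, assuming durations are already given as whole numbers of sixteenth notes; that encoding and the `count_rhythm` function are hypothetical illustrations, and reading the durations off the page is the step that has to come first.

```python
def count_rhythm(durations_in_sixteenths: list[int], beats_per_measure: int = 4) -> list[str]:
    """Return the "1 e & a" syllable on which each note starts.

    durations_in_sixteenths: note lengths in sixteenth notes
    (quarter = 4, dotted eighth = 3, eighth = 2, sixteenth = 1).
    beats_per_measure: top number of the time signature (quarter-note beats).
    """
    syllables = []
    position = 0  # onset of the current note, in sixteenths from the start
    for duration in durations_in_sixteenths:
        beat, subdivision = divmod(position, 4)  # four sixteenths per beat
        beat_number = beat % beats_per_measure + 1  # restart at 1 each measure
        if subdivision == 0:
            syllables.append(str(beat_number))
        else:
            syllables.append(("e", "&", "a")[subdivision - 1])
        position += duration
    return syllables


# A made-up 4/4 bar: eighth, two sixteenths, quarter,
# dotted eighth + sixteenth, quarter.
example = [2, 1, 1, 4, 3, 1, 4]
print(" ".join(count_rhythm(example)))  # 1 & a 2 3 a 4
```

Passing `beats_per_measure=3` counts in 3/4 instead, so the beat numbers wrap back to 1 after every third beat.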

All three models were unable to count the rhythmic excerpt I provided, yet they confidently answered with incorrect information.

Discussion

These AI chatbots are not built to be music specialists in any way. In fact, they’re meant to be generalists: good at many different things involving human language (like writing jokes, fixing grammar, or answering all of your random late-night queries).

However, I expected these AI chatbots to have at least a rudimentary ability to reason over sheet music, especially since sheet music was likely included in their training datasets.

My conclusion from these experiments is that current state-of-the-art multimodal language models are sorely lacking in visual reasoning capabilities when it comes to reading music (as of April 2024).

In the music identification task, Gemini Pro was the only model able to correctly identify any of the sheet music (Clair de Lune and Stairway to Heaven). However, when I asked it to analyze a piece it could not identify (from Howl’s Moving Castle), its analysis was entirely wrong. This makes me believe that Gemini Pro has the weakest musical reasoning capabilities of the three models. It seems to have identified some pieces purely through pattern matching (perhaps because those pieces appear more frequently in its training corpus), but it showed no real musical understanding of other pieces.

Claude 3 was always wrong in the music identification task, but at least it made its best effort. ChatGPT-4, on the other hand, refused to identify a single piece. Whether this refusal stems from laziness or from a deep-seated perfectionism, it is difficult to say.

All 3 models were unable to parse rhythmic notation.

That said, none of this is definitive, as all of these models are evolving rapidly. If I were to repeat these experiments in a few months’ time, who knows how different the results would be. But for now, I probably wouldn’t go to ChatGPT, Claude, or Gemini Pro for my (multimodal) music-related questions.

If you liked what you read, leave a comment and share your thoughts!

Citation

For attribution in academic contexts or books, please cite this work as

Yennie Jun, "Can AI Read Music?," Art Fish Intelligence, 2024.

@article{Jun2024aimusic,
  author = {Yennie Jun},
  title = {Can AI Read Music?},
  journal = {Art Fish Intelligence},
  year = {2024},
  howpublished = {\url{https://www.artfish.ai/p/can-ai-read-music}},
}

¹ ChatGPT: https://chat.openai.com/

Claude: https://claude.ai/chat

Gemini: https://gemini.google.com/app

Throughout this article, I refer to each of these products as a “model” or “LLM”, but technically they are closer to “agents”: each of these three chatbots combines a model with additional tools that are abstracted away from the user and that we are not aware of. For the purposes of this article, though, I use these terms somewhat interchangeably.
